Many businesses struggle to manage fluctuating workloads in their cloud applications efficiently. Traditional scaling methods often lead to two problems: overprovisioning, which wastes resources and increases costs, and underprovisioning, which degrades application performance and customer experience.

This is where Kubernetes, the most popular container orchestration platform, comes in: its auto-scaling capabilities let it adapt to dynamic workloads. It adjusts the number of running pods based on real-time demand, helping you maintain optimal resource utilization and keep infrastructure costs within budget without overpaying!

So, what types of auto-scaling does Kubernetes offer, and how can you use them across your Kubernetes clusters? JUTEQ, the leading Cloud and AI Consulting Company, is here to explain how you can use Kubernetes auto-scaling in your cloud-native environment!

The Purpose of Kubernetes Auto-Scaling

Autoscaling is a core Kubernetes capability that adjusts the number of running application pods based on real-time demand, automatically increasing or reducing the number of application instances across your clusters as workloads change.

With Kubernetes auto-scaling, cloud applications can handle sudden workload spikes without degraded performance, and resource utilization stays efficient without manual intervention. So your applications keep running smoothly as demand rises and falls!

Types Of Auto-Scaling In Kubernetes

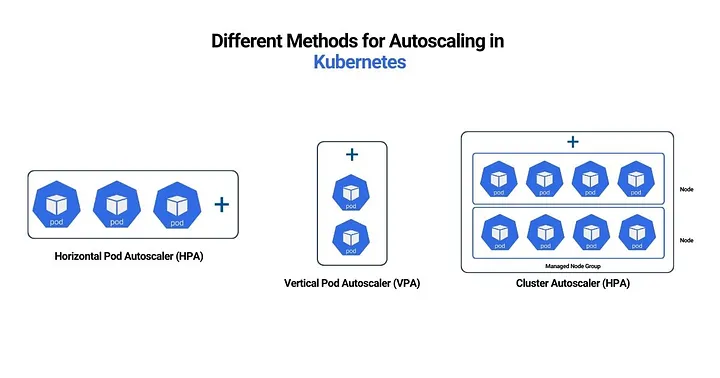

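Kubernetes supports three main autoscaling mechanisms, each serving different purposes and use cases. The Horizontal Pod Autoscaler is built in, while the Vertical Pod Autoscaler and the Cluster Autoscaler are installed as add-ons or enabled by your managed Kubernetes provider. Let’s check them out one by one!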

1. Vertical Pod Autoscaler (VPA)

The Vertical Pod Autoscaler (VPA) adjusts the CPU and memory requests of pod containers to match actual usage rather than relying on static resource allocations.

How Does It Work?

A VPA deployment consists of three components: the Recommender, which monitors resource utilization and computes target values; the Updater, which evicts pods whose resource requests need updating; and the Admission Controller, which sets the updated resource requests on pods at creation time. Because Kubernetes has traditionally not allowed changing the resources of a running pod, VPA applies new values by terminating and recreating pods.

Use Cases

VPA is ideal for workloads with unpredictable or varying resource requirements. It helps avoid over-provisioning, which saves costs, while ensuring that applications have enough resources during peak usage. VPA can also run in recommendation-only mode, where it computes suggested resource requests without changing any pods.
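To try VPA safely, you can start in recommendation-only mode. The following is a minimal sketch, assuming the VPA components and their `VerticalPodAutoscaler` CRD are installed and that a Deployment named `hello-world` (the example name used later in this post) exists; the manifest name `hello-world-vpa` is illustrative. The script writes a manifest with `updateMode: "Off"`, so the Recommender publishes targets without evicting any pods.

```shell
# Write a VerticalPodAutoscaler manifest in recommendation-only mode.
# Assumes the VPA CRDs are installed; "hello-world" is a hypothetical Deployment.
cat > hello-world-vpa.yaml <<'EOF'
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: hello-world-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hello-world
  updatePolicy:
    # "Off": the Recommender computes targets, but pods are never evicted or mutated
    updateMode: "Off"
EOF
```

Apply it with `kubectl apply -f hello-world-vpa.yaml`, then run `kubectl describe vpa hello-world-vpa` after some time to read the recommended requests. Changing `updateMode` to `"Auto"` lets the Updater and Admission Controller apply them.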

2. Horizontal Pod Autoscaler (HPA)

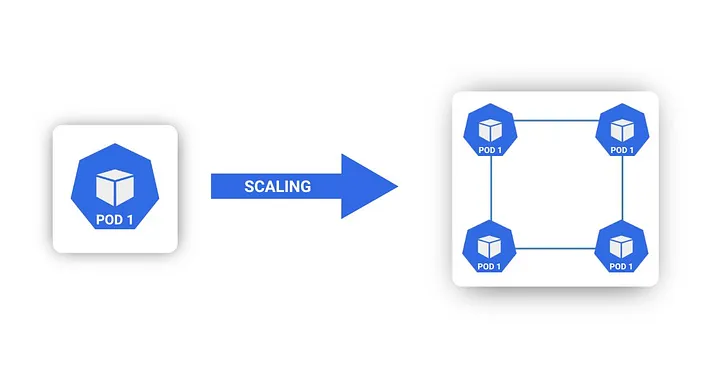

The Horizontal Pod Autoscaler (HPA) adjusts the number of pod replicas in response to changes in application demand. It is ideal for scaling stateless applications but can also scale StatefulSets.

How Does It Work?

The HPA controller periodically checks pod metrics, such as CPU utilization, to decide whether to adjust the number of replicas. For example, if a deployment targets 50% CPU utilization and the current average is 75%, usage is 1.5 times the target, so HPA increases the replica count by roughly that factor to spread the load.
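The scaling decision in this example comes from a simple ratio. Per the Kubernetes HPA documentation, the controller computes desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric) and skips changes when that ratio is within a tolerance of 1.0 (10% by default). The bash function below is a hypothetical sketch of only that core formula, using integer percentages; the real controller also applies `minReplicas`/`maxReplicas`, stabilization windows, and special handling for pods that are not ready or lack metrics.

```shell
# Hypothetical sketch of the core HPA replica formula (integer percentages only):
#   desired = ceil(replicas * current / target),
# with no change when current/target is within the default 10% tolerance of 1.0.
hpa_desired_replicas() {
  local replicas=$1 current=$2 target=$3
  local diff=$(( current - target ))
  if (( diff < 0 )); then diff=$(( -diff )); fi
  # |1 - current/target| <= 0.1  is equivalent to  10 * |current - target| <= target
  if (( diff * 10 <= target )); then
    echo "$replicas"
    return 0
  fi
  # Integer ceiling division: ceil(a / b) == (a + b - 1) / b for positive a and b
  echo $(( (replicas * current + target - 1) / target ))
}

hpa_desired_replicas 4 75 50    # 1.5x the target: prints 6
hpa_desired_replicas 4 52 50    # within tolerance: prints 4
hpa_desired_replicas 10 20 50   # 0.4x the target: prints 4
```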

Use Cases

HPA is perfect for applications with fluctuating usage, such as web services with varying traffic loads. It requires a metrics source, such as the Metrics Server for CPU and memory usage; for custom metrics, you need a service implementing the custom.metrics.k8s.io API. Combining HPA with the Cluster Autoscaler can save costs by reducing the number of active nodes when demand decreases.
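As a minimal sketch, assuming the Metrics Server is installed and the `hello-world` Deployment's containers declare CPU requests (utilization is measured against requests), the script below writes an `autoscaling/v2` HorizontalPodAutoscaler targeting 50% average CPU utilization. The replica bounds of 2 and 10 and the name `hello-world-hpa` are illustrative assumptions.

```shell
# Write an autoscaling/v2 HPA that keeps average CPU utilization near 50%.
# "hello-world" is a hypothetical Deployment whose containers set CPU requests.
cat > hello-world-hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: hello-world-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hello-world
  minReplicas: 2          # never scale below this
  maxReplicas: 10         # never scale above this
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50   # percent of each pod's CPU request
EOF
```

Apply it with `kubectl apply -f hello-world-hpa.yaml`. For quick experiments, `kubectl autoscale deployment hello-world --cpu-percent=50 --min=2 --max=10` creates a comparable CPU-based HPA imperatively.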

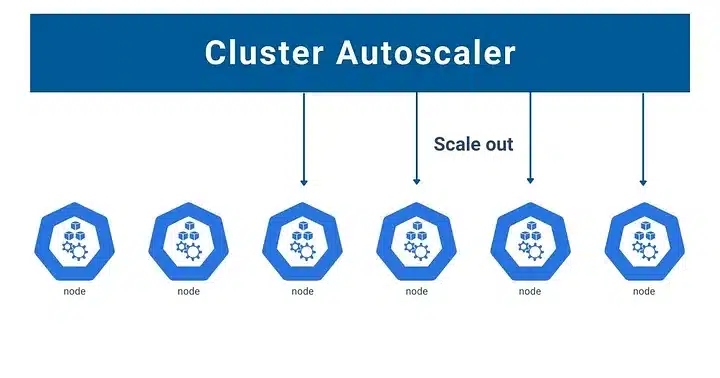

3. Cluster Autoscaler

While HPA manages the number of pods, the Cluster Autoscaler adjusts the number of nodes in a cluster based on resource needs.

How Does It Work?

The Cluster Autoscaler requires configuration specific to your cloud provider, including permissions to add and remove nodes. If you use a managed Kubernetes service, check whether it offers the Cluster Autoscaler as a built-in option.

The autoscaler looks for pods that cannot be scheduled because of insufficient resources and decides whether adding a node would let them run. It also checks whether underutilized nodes can be removed by rescheduling their pods onto other nodes, taking pod priority and Pod Disruption Budgets into account.
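To make the scale-down check more concrete: by default, the Cluster Autoscaler treats a node as unneeded only when the sum of its pods' CPU requests and the sum of their memory requests are both below 50% of the node's allocatable capacity (the `--scale-down-utilization-threshold` flag), and the node must stay unneeded for a period (10 minutes by default) before it is removed. The bash function below is a simplified, hypothetical sketch of just that utilization test; it omits the rescheduling simulation, PDB checks, and other safeguards described above.

```shell
# Simplified, hypothetical sketch of the Cluster Autoscaler's utilization test:
# a node is a scale-down candidate only if BOTH CPU and memory requests are
# below the threshold percentage of the node's allocatable capacity.
is_scale_down_candidate() {
  local cpu_req_m=$1 cpu_alloc_m=$2 mem_req_mi=$3 mem_alloc_mi=$4
  local threshold_pct=${5:-50}   # mirrors the default threshold of 0.5
  if (( cpu_req_m * 100 < cpu_alloc_m * threshold_pct )) &&
     (( mem_req_mi * 100 < mem_alloc_mi * threshold_pct )); then
    echo "candidate"
  else
    echo "keep"
  fi
}

is_scale_down_candidate 1200 4000 3000 16000   # 30% CPU, ~19% memory: prints candidate
is_scale_down_candidate 2500 4000 3000 16000   # 62.5% CPU: prints keep
```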

Use Cases

The Cluster Autoscaler works on supported cloud platforms and needs secure credentials to manage infrastructure. It is ideal for dynamically scaling the number of nodes to match the current cluster utilization, helping manage cloud platform costs.

Best Tools & Approaches To Perform Kubernetes Autoscaling

Now that you know the main types of Kubernetes autoscaling mechanisms, here are the tools and best practices you can use to do it right!

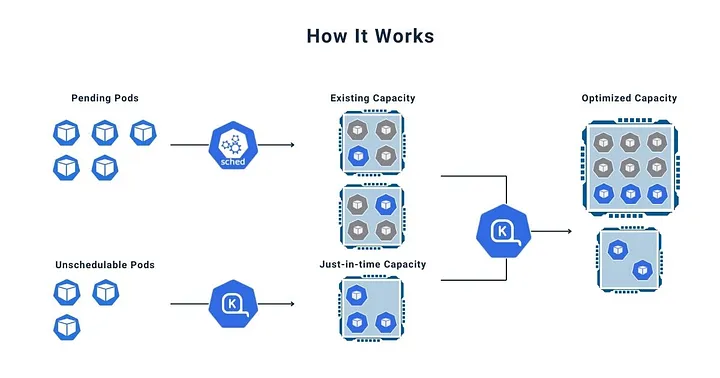

Consolidating Kubernetes Workloads With Karpenter

Karpenter is a powerful tool designed to enhance Kubernetes auto-scaling capabilities. Originally built by AWS and now part of the Kubernetes project under SIG Autoscaling, Karpenter is not limited to a single cloud: providers exist for AWS and Azure (including Azure Kubernetes Service, AKS). It provisions nodes directly based on the requirements of your pending workloads. Unlike autoscalers that resize predefined node groups, Karpenter considers factors such as pod resource requests and scheduling constraints to choose right-sized nodes.

How to Use It: Karpenter's consolidation feature packs pods onto fewer or cheaper nodes and removes empty or underutilized ones, minimizing resource fragmentation. This reduces operational costs and simplifies cluster management. You configure node provisioning and consolidation behavior through Karpenter NodePool resources.
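Consolidation is configured per NodePool. The sketch below assumes Karpenter's `karpenter.sh/v1` API with the AWS provider and an existing `EC2NodeClass` named `default`; the `nodeClassRef` group and kind are provider-specific (the Azure provider uses its own node class), and the requirements and CPU limit are illustrative values. With `consolidationPolicy: WhenEmptyOrUnderutilized`, Karpenter may remove empty nodes or replace underutilized ones with fewer or cheaper nodes.

```shell
# Write a Karpenter NodePool that consolidates empty or underutilized nodes.
# Assumes Karpenter v1 with the AWS provider and an EC2NodeClass named "default";
# nodeClassRef differs on other providers such as Azure.
cat > karpenter-nodepool.yaml <<'EOF'
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand", "spot"]
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default
  limits:
    cpu: "1000"                 # cap on total CPU this NodePool may provision
  disruption:
    consolidationPolicy: WhenEmptyOrUnderutilized
    consolidateAfter: 1m        # wait 1m after pod changes on a node before consolidating it
EOF
```

Apply it with `kubectl apply -f karpenter-nodepool.yaml`. Karpenter respects Pod Disruption Budgets when it consolidates, so pairing NodePools with PDBs keeps consolidation within your availability limits.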

Using Kubernetes Pod Disruption Budget (PDB)

Maintaining application stability during scaling events is crucial. A Kubernetes Pod Disruption Budget (PDB) lets you set the minimum number of pods that must stay available, or the maximum number that may be unavailable, during voluntary disruptions such as node drains, upgrades, or Cluster Autoscaler scale-downs. By setting a PDB, you can ensure that critical applications remain available even as Kubernetes dynamically adjusts resources. This feature is essential for businesses that prioritize uptime and reliability.
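A minimal sketch, assuming the `hello-world` pods carry the label `app: hello-world` (a hypothetical label): the script writes a `policy/v1` PodDisruptionBudget that allows at most one of those pods to be voluntarily evicted at a time. You could use `minAvailable` instead of `maxUnavailable` to express the budget as a floor.

```shell
# Write a PodDisruptionBudget allowing at most one hello-world pod to be
# voluntarily evicted at a time (e.g. during node drains or scale-down).
# The label app=hello-world is a hypothetical example.
cat > hello-world-pdb.yaml <<'EOF'
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: hello-world-pdb
spec:
  maxUnavailable: 1
  selector:
    matchLabels:
      app: hello-world
EOF
```

After `kubectl apply -f hello-world-pdb.yaml`, `kubectl get pdb hello-world-pdb` shows how many disruptions are currently allowed. PDBs only govern voluntary evictions; they do not protect against node failures.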

Use the kubectl Command To Scale a Deployment Down To 0

Sometimes, you might need to scale down specific deployments to zero to free up resources or manage costs. This approach is useful for temporarily removing pods without deleting their configurations, allowing efficient resource management.

Using the `kubectl scale` command, you can set the number of replicas of a deployment to zero. To scale down a deployment named hello-world, use the following command:

```shell
kubectl scale deployment hello-world --replicas=0
```

The Deployment object and its configuration stay in the cluster, so you can bring the pods back later by scaling to a non-zero replica count (for example, `--replicas=3`).

Autoscaling Based on Cluster Size

For workloads that need to scale with the cluster size (e.g., cluster DNS or other system components), you can use the Cluster Proportional Autoscaler. This tool watches the number of schedulable nodes and cores and scales the replicas of the target workload accordingly.

How to Use It: While the Cluster Proportional Autoscaler scales the number of replicas, the Cluster Proportional Vertical Autoscaler adjusts the resource requests for a workload based on the cluster’s number of nodes and cores. Both tools ensure your workloads are appropriately scaled as your cluster size grows or decreases.
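In its linear mode, the Cluster Proportional Autoscaler computes replicas = max(ceil(cores / coresPerReplica), ceil(nodes / nodesPerReplica)) and then clamps the result to configured `min` and `max` values. The bash function below is a hypothetical sketch of that formula using integer parameters only; the real tool reads these parameters from a ConfigMap and supports extra options such as `preventSinglePointFailure`, which this sketch omits.

```shell
# Hypothetical sketch of the Cluster Proportional Autoscaler's linear mode:
#   replicas = max(ceil(cores / coresPerReplica), ceil(nodes / nodesPerReplica)),
# clamped to [min, max]. Integer inputs only.
cpa_linear_replicas() {
  local cores=$1 nodes=$2 cores_per_replica=$3 nodes_per_replica=$4 min=$5 max=$6
  # Integer ceiling division for each dimension
  local by_cores=$(( (cores + cores_per_replica - 1) / cores_per_replica ))
  local by_nodes=$(( (nodes + nodes_per_replica - 1) / nodes_per_replica ))
  # Take whichever dimension demands more replicas
  local replicas=$(( by_cores > by_nodes ? by_cores : by_nodes ))
  # Clamp to the configured bounds
  if (( replicas < min )); then replicas=$min; fi
  if (( replicas > max )); then replicas=$max; fi
  echo "$replicas"
}

cpa_linear_replicas 400 100 256 16 1 100   # max(2, 7): prints 7
cpa_linear_replicas 64 4 256 16 2 100      # max(1, 1) raised to min: prints 2
```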

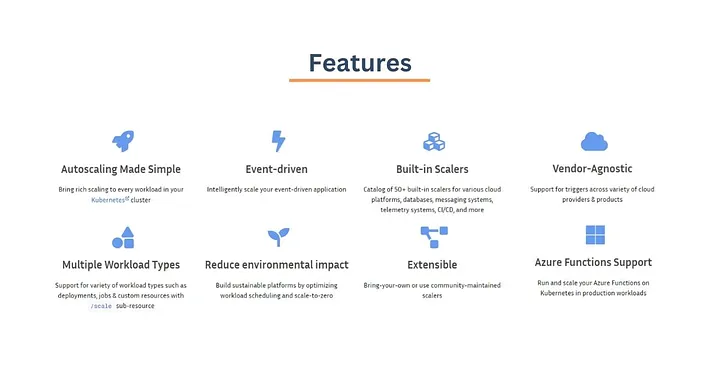

Consider Kubernetes Event-Driven Autoscaling (KEDA)

You can scale workloads based on events using Kubernetes Event-driven Autoscaling (KEDA). KEDA scales your workloads based on the number of events to be processed, such as the number of messages in a queue.

How to Use It: KEDA provides a wide range of scalers for different event sources, letting you trigger scaling operations based on real-time event metrics, including scaling to zero when there is nothing to process. This approach is beneficial for applications that experience variable event loads.
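As a minimal sketch of queue-based scaling, assuming KEDA is installed, a hypothetical `order-worker` Deployment consumes a RabbitMQ queue named `orders`, and its container exposes the broker connection string in a `RABBITMQ_HOST` environment variable, the script below writes a KEDA `ScaledObject`. With `mode: QueueLength` and `value: "20"`, KEDA aims for about 20 pending messages per replica, and `minReplicaCount: 0` lets the worker scale to zero when the queue is empty. Other event sources use different scaler types and metadata, so check the KEDA scaler documentation for yours.

```shell
# Write a KEDA ScaledObject that scales a hypothetical "order-worker"
# Deployment on RabbitMQ queue length. Assumes KEDA is installed and the
# worker container sets RABBITMQ_HOST to the broker connection string.
cat > order-worker-scaledobject.yaml <<'EOF'
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: order-worker-scaler
spec:
  scaleTargetRef:
    name: order-worker          # Deployment to scale
  minReplicaCount: 0            # scale to zero when the queue is empty
  maxReplicaCount: 20
  triggers:
    - type: rabbitmq
      metadata:
        queueName: orders
        mode: QueueLength       # scale on the number of messages in the queue
        value: "20"             # target messages per replica
        hostFromEnv: RABBITMQ_HOST
EOF
```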

Autoscaling Based on Schedules

Scheduling scaling operations can reduce resource consumption during off-peak hours. Using KEDA with its Cron scaler, you can define schedules (and time zones) for scaling your workloads in or out.

How to Use It: By setting up Cron-based scaling rules, you can ensure that your applications scale according to predictable usage patterns, optimizing resource allocation during peak and off-peak times.
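A minimal sketch of schedule-based scaling with KEDA's cron scaler, assuming KEDA is installed and reusing the hypothetical `hello-world` Deployment: inside the window between `start` and `end` (cron expressions evaluated in the given IANA `timezone`), KEDA scales the workload to `desiredReplicas`; outside it, with no other triggers active, the workload falls back to `minReplicaCount`. The Toronto time zone, the weekday 08:00 to 18:00 window, and the replica counts are illustrative choices.

```shell
# Write a KEDA ScaledObject that holds a hypothetical "hello-world" Deployment
# at 10 replicas during weekday business hours using the cron scaler.
cat > hello-world-cron.yaml <<'EOF'
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: hello-world-business-hours
spec:
  scaleTargetRef:
    name: hello-world
  minReplicaCount: 1            # replicas outside the scheduled window
  maxReplicaCount: 10
  triggers:
    - type: cron
      metadata:
        timezone: America/Toronto   # any IANA time zone name
        start: "0 8 * * 1-5"        # 08:00, Monday to Friday
        end: "0 18 * * 1-5"         # 18:00, Monday to Friday
        desiredReplicas: "10"
EOF
```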

Implementing Auto-Scaling in Your Enterprise: The JUTEQ Way!

JUTEQ offers comprehensive Kubernetes Strategy & Consulting services to help you strategize the right approach for implementing autoscaling in your enterprise infrastructure.

Here’s how our certified Kubernetes Experts can help you integrate Kubernetes auto-scaling into your enterprise solutions:

- Strategic Planning and Assessment: JUTEQ starts by assessing your infrastructure and application requirements. Our experts identify the best auto-scaling strategies that align with your business goals.

- Customized Configuration & Deployment: We customize the configuration of auto-scaling tools like AKS Karpenter to meet your specific needs. Our team handles the deployment process for a seamless integration with your existing Kubernetes environment.

- Continuous Monitoring and Optimization: Auto-scaling is not a set-and-forget solution. JUTEQ provides constant monitoring and optimization to ensure your auto-scaling strategies remain effective. We use real-time data to adjust scaling parameters, improve resource utilization, and maintain application stability.

Our tailored approach ensures that your cloud applications can fully utilize Kubernetes auto-scaling capabilities to provide maximum efficiency and scalability!

Ready to take the next step in your Kubernetes journey? Contact JUTEQ today!